I have two advanced text replacement rules I’d like to implement in a touch keyboard layout.

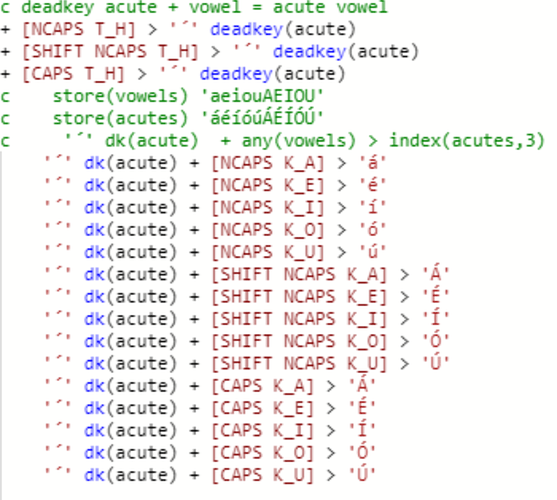

The first is that I have a deadkey for acute accent, which types an independent accent character. If it is followed by a vowel, it replaces with an acute accented vowel; if it is followed by any other keystroke (including multi-character keys), then the accent is deleted and the key types as normal.

I would like it so that if I tap acute accent followed by [T_NG], then the acute accent character would disappear and I would just get ‘nd’. This would be something like

[T_ACUTE] + any(vowels) > index(acutevowels, 2)

[T_ACUTE] + any(keys) > keystroke

Where “keystroke” is like “context”, but outputs the normal output for whatever virtual key caused it. As far as I can tell this doesn’t exist, but I am looking for a potential workaround. (If nothing else, I can fall back on either making the deadkey not give visual feedback, or to have the accent key typed after the vowel, not before)

Second:

I would like to have a key that can apply accents retroactively to vowels in a word, after it has been typed. So for example:

nihruaa [T_ACUTE] [T_GRAVE][T_NOTONE][T_ACUTE] becomes níhrùaá

I can hardcode this behavior using a lot of complex rules, but I am looking for a more concise solution.

In particular, I’m wondering if it’s possible to make an optional context element. The orthographic words in Itunyoso Triqui have a relatively constrained template of:

((C)(C)V) ((C)(C)V) ((C)(C)V) ((C)(C)(C)V(V)(n)(jh))

(Up to three (C)(C)V syllables, and a final syllable with up to three onset C’s, optional double vowel in the final syllable, an optional ‘n’, and an optional ‘j’ or ‘h’ after the last vowel)

which could be implemented easily if I could have a context store that matches either any letter or nothing (akin to a “?” in regular expressions).

Is this sort of thing possible? The brute force alternative would be to create a large number of rules for each possible configuration, so it would be very helpful!